Chapter 1:i-Net Basics

Management

and Control of the Internet

Evolution

of the World Wide Web

Exercise

1-1: Converting Domain Names and IP Addresses

Certification

Objectives

|

|

Introduction to the Internet |

|

|

URL Components and Functions |

|

|

Types of URLs |

|

|

Internet Site Performance and Reliability |

This chapter

provides you with an introduction and overview of the Internet. You will learn

about the origins and evolution of the Internet and the emergence of internal

private networks called intranets.

You will be familiarized with underlying Internet technologies including the

World Wide Web, Web browsers, Web servers, and Uniform Resource Locators (URL).

You are also

going to become aware of the issues that affect Internet / intranet / extranet

site functionality and performance, including bandwidth constraints, customer

and user connectivity, and connection access points and speed. You will see how

e-business leverages these technologies by linking organizations to their

supply chain, including business partners, suppliers, distributors, customers,

and end users using extranets and the Internet.

Introduction to the

Internet

The Internet is

perhaps best described as the world’s largest Interconnected Network

of networks. There is no centralized control of the Internet; instead, many

millions of individual networks and computers are interconnected throughout the

world to communicate with each other. The Internet is not only technology, it’s

a global community of people, including corporations, nonprofit organizations,

educational institutions, and individuals.

The early roots

of the Internet can be traced back to the Advanced

Research Projects Agency (ARPA). ARPA is an agency within the United States

Federal Government formed by the Eisenhower administration in 1957 with the

purpose of conducting scientific research and developing advanced technologies

for the U.S. military.

One of ARPA’s

research areas was developing a large-scale computer network that could survive

a serious nuclear attack. This network came to be known as ARPAnet. ARPAnet was designed to insure reliable communications

between individual computers (nodes), even in the event of failures between

connecting computer networks. The architecture of ARPAnet provided the

foundation for the Internet as we know it today.

The Internet was

originally comprised almost exclusively of government research centers and

universities within the United States. Today, the Internet continues to expand

internationally as it is commercialized. The major obstacle to further growth

in underdeveloped countries is the lack of a reliable telecommunications

infrastructure. In third-world nations and parts of Eastern Europe, modern

telephone systems are typically not available.

History and

Evolution of the Internet

Different

organizations have been involved in the development of the Internet, including

the United States Federal Government, academic organizations, and industry. The

Internet has gone through many stages of technology development. This timeline

highlights the major events that led to its development.

|

|

1962 The report On Distributed Communications Networks is published by the Rand Corporation. It proposes a computer network model in which there is no central command or control structure, and all nodes are able to reestablish contact in the event of an attack on any single node. |

|

|

1969 The Department of Defense (DoD) commissions ARPA for research into computer networks. The first node on this network is at the University of California at Los Angeles (UCLA). Other computers on the network are at the Stanford Research Institute, the University of California at Santa Barbara, and the University of Utah. |

|

|

1982 TCP/IP is established as the data transfer protocol for ARPAnet. This is one of the first times the term "Internet" is used. The DoD declares TCP/IP to be the standard for the U.S. military. |

|

|

1986 The National Science Foundation (NSF) creates NSFnet, which eventually replaces ARPAnet, and substantially increases the speed of communication over the Internet. |

|

|

1989 Tim Berners-Lee at CERN drafts a proposal for the World Wide Web. |

|

|

1990 ARPAnet is superseded by NSFnet. |

|

|

1991 Gopher is introduced by the University of Minnesota. |

|

|

1992 The World Wide Web (WWW) is born at CERN in Geneva, Switzerland. |

|

|

1993 The Mosaic World Wide Web browser is developed by the National Center for Supercomputing Applications (NCSA). Mosaic was the first Web browser with a graphical user interface. It was released initially for the UNIX computer platform, and later in 1993 for Macintosh and Windows computers. |

|

|

1994 The Netscape Navigator Web browser is introduced. The Web experiences phenomenal growth. |

|

|

1995 Sun introduces the Java programming language. Netscape Navigator 2.0 ships with support for Java applets. Navigator becomes the dominant Web browser. |

|

|

1996 Users in almost 150 countries around the world are now connected to the Internet. The number of computer hosts approaches 10 million. Commercial applications of the Internet explode |

|

|

1997 Intraconnected Networks or intranets are deployed based on Internet technologies. |

|

|

1998 Commercial applications of the Internet explode, including business-to-consumer |

|

|

e-commerce, e-auctions, and e-portals. |

|

|

1999 America Online (AOL) acquires Netscape and partners with Sun Microsystems. e-business applications explode to extend e-commerce to business-to-business extranets linking the supply chain, including customers, suppliers, business partners, and distributors together. |

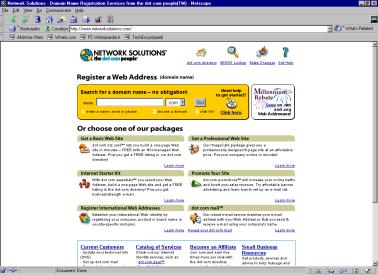

Management and

Control of the Internet

One of the most

frequently asked questions is, “who controls the Internet,” or “who runs the Internet?”

The best answer is no one and everyone. The roots of the Internet grew out of research sponsored by ARPA.

As the technology evolved, the NSF became involved in the expansion and

management of the Internet in 1984. Both NSF and ARPA were organizations funded

entirely, or in part, by the U.S. Government working closely with academic

institutions. The control and management of domain names was passed from NSF to

Network Solutions, Inc. Network Solutions had a monopoly on the distribution of

domain names until 1999 when the process was opened up to other companies.

Today, no single

organization, government, or nation controls the technology, content, or

standards of the Internet. Because the Internet fosters international

cooperation, it is often referred to as the global

community. International standards bodies, including the Internet

Engineering Task Force (IETF), the Internet Society (ISOC), and the World Wide

Web Consortium (W3C) are leading the process to develop international

standards.

Commercialization

of the Internet has contributed to its rapid expansion and constant evolution.

Most large corporations have an Internet presence in the form of a Web site or

e-commerce site. The use of intranets today is as common as the use of LANs was

in the 1980s. The exploding area linking business and technology for the new

millennium is the rapid deployment of e-business.

Evolution of the

World Wide Web

The idea for the

Web is attributed to Tim Berners-Lee of CERN, the European Laboratory for

Particle Physics in Geneva, Switzerland. In 1989, Berners-Lee conceived the

architecture for the Web as a multimedia

hypertext information system based on a client/server architecture.

Like the Internet, the Web is a

network composed of many smaller computer networks. Specialized

servers—referred to as Web servers—store and disseminate information, and Web

clients—referred to as Web browsers—download and display the information for

end users.

Web Browser Evolution

The

first-generation Web browser developed at CERN was character based. It was very

primitive by today’s standards and only capable of displaying text (e.g., Lynx

browser). It wasn’t until the Mosaic browser became available in 1993 that the

potential of the Web began to be realized.

Mosaic was

developed at the National Center for Supercomputing Applications (NCSA) by a

team of software engineers led by Marc Andreesson. Mosaic was the first

graphical browser to take advantage of the multimedia capabilities of the Web.

Equally important, Mosaic was cross-platform, allowing users to view the same

Web pages on Windows, Macintosh, and UNIX computer platforms.

In 1994,

Andreesson left NCSA and co-founded Netscape Communications Corporation in

Mountain View, California. In 1995, the Netscape Navigator Web browser quickly

became the most widely used cross-platform Web browser on the market. Netscape

integrated all of the features of Mosaic, and added many new features and

capabilities as well.

The

second-generation Netscape Navigator browser, version 2.0, was released for

general availability in February 1996. Many new capabilities were incorporated

into Netscape 2.0, including support for Java applets, Acrobat files, Shockwave

files, and built-in HTML authoring.

Components of the

Intranet

The Internet is

based on these fundamental technology components:

|

|

Internet Clients |

|

|

Internet Servers |

|

|

Communications Protocols |

Internet Clients and

Web Browsers

Internet clients

represent computer nodes or client software such as Web browsers, e-mail, FTP,

and newsgroup clients. When a client requests data from a server, information

is downloaded. Alternately, when a

client transfers data to a server, it is uploaded

as shown in the illustration below.

The computer and

network infrastructure underlying the WWW is the same as that of the Internet.

What differentiates the Web from the Internet is the multimedia capability

offered by Web browser and servers. A Web browser is a client application that

displays multimedia hypertext documents (Web pages). Examples of Web browsers

include Microsoft Internet Explorer and Netscape Navigator.

Exam Watch: Even

though the CompTIA i-Net+ exam is based on vendor-neutral standards, you should

be familiar with actual vendor implementations and products based on these

standards to do well on the exam. For example, when discussing browser

concepts, you should be familiar with the most recent versions of both the

Netscape and Microsoft Web browsers.

Internet Servers

A server (also called a host) is a computer or software application that makes available

(or serves the client) data and

files. Internet servers are available for the Web, Mail, News, and other

Internet services.

The purpose of a

Web server is to store and disseminate Web

pages, interact with the client browser, and process user transactions and

requests such as database queries. Web servers are available for all popular

computer platforms and operating systems, including Windows, Macintosh, and

UNIX. Essentially, a Web server is sitting and listening for a request from a

browser to download a document (Web page) residing on the server. The purpose

of the server is to “serve” documents to a client and interact with backend

systems such as databases and other servers

Web documents are

written using the HyperText Markup Language

(HTML). HTML is a Web-based standard

that describes how Web documents are displayed in a Web browser or thin client.

A thin client may be a Web browser, network computer, personal digital

assistant, or any device capable of displaying HTML. Since HTML is a

cross-platform language, the same Web pages can be viewed in various browsers

on Windows, Macintosh, and UNIX computer platforms. As newer types of thin

clients emerge, the Web will become a pervasive part of our lives.

A common

misconception about the WWW is that a thin client maintains a continuous

connection with the Web server. In fact, once the information is downloaded

from the server to the browser, the transaction is completed, and the

connection is terminated. The information in the browser is viewed without

remaining connected to the server. In order to download new information, a new

transaction is required.

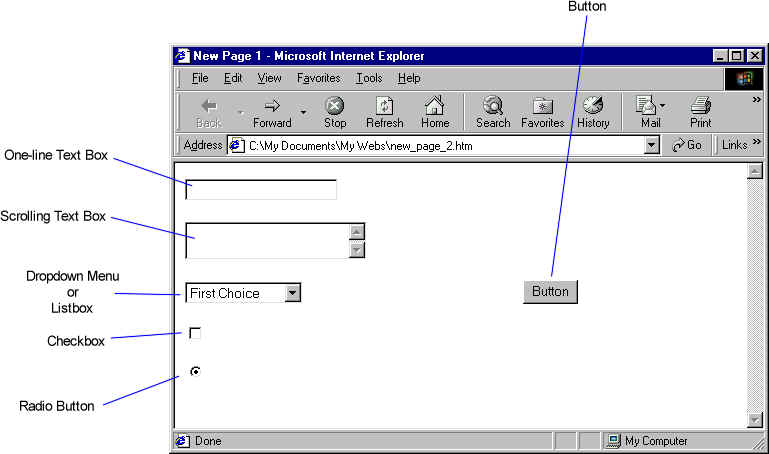

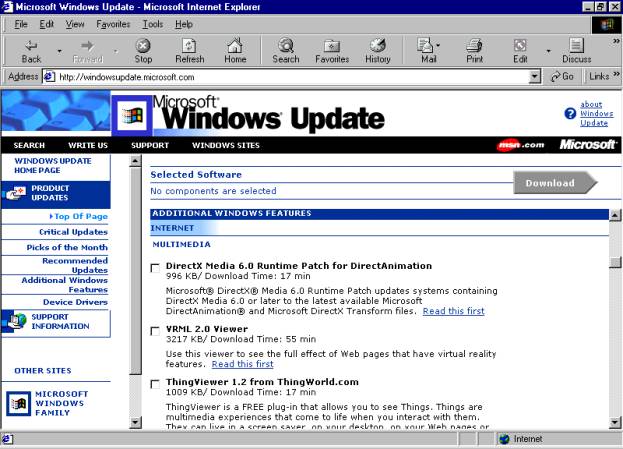

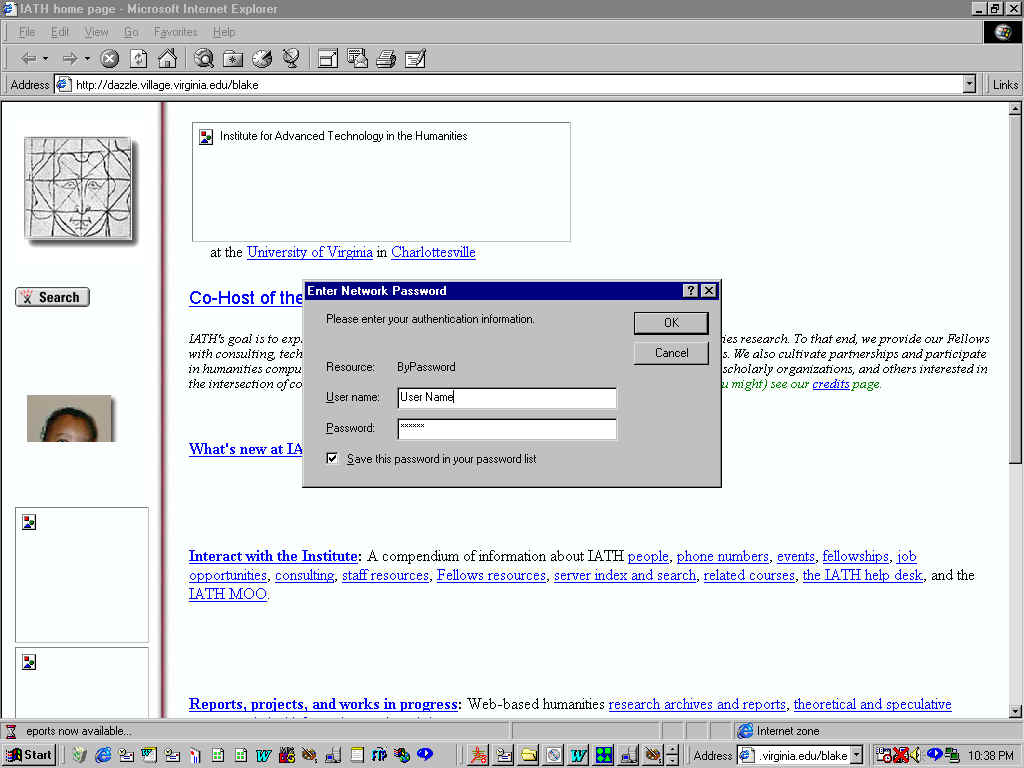

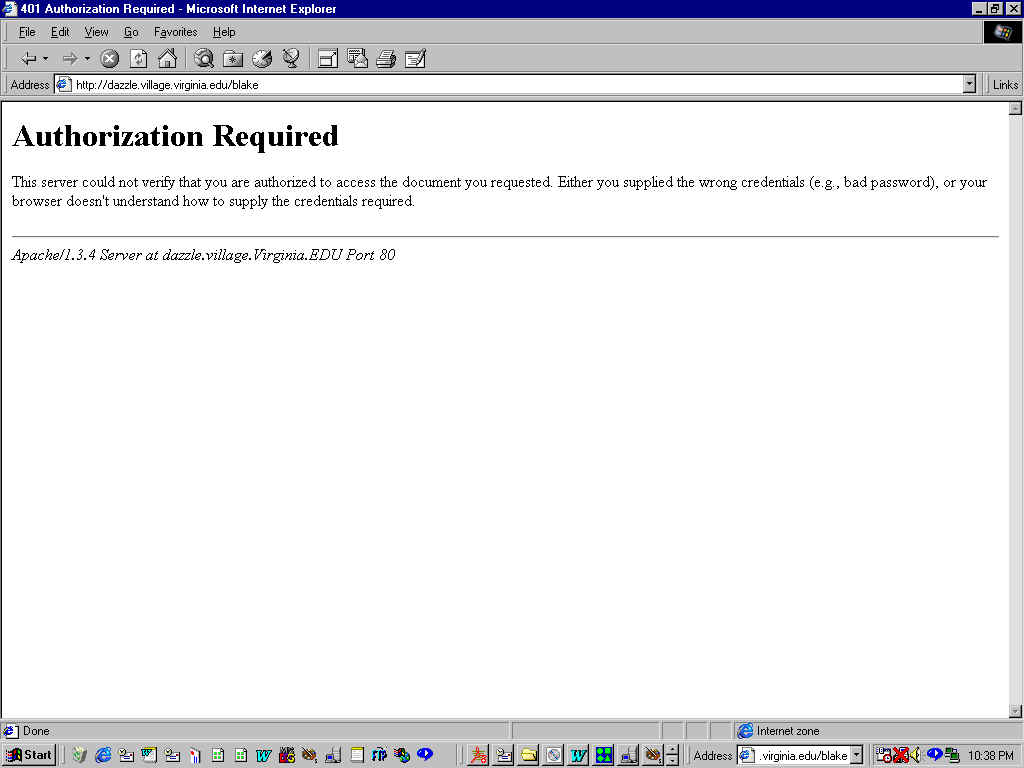

Figure 1-3 shows

the relationship between a Web browser and a Web server. A Web browser requests

a document by entering the address of the document by entering its Uniform Resource Locator otherwise known

as a URL or Web address. A connection is attempted between the client and the

server and, if successful, a document is downloaded from the server and viewed

in the browser.

Figure 1:

Interaction between a Web browser and Web server

For example,

consider connecting to the CompTIA Web site. To do this, the URL for CompTIA—http://www.comptia.org—is entered in

the browser location text field. The browser attempts to make a connection to

the CompTIA Web server. If the connection is successful, a Web page (HTML file)

is downloaded to the client browser that made the request as shown in Figure

1-1, and the connection is closed.

Intranets and

Extranets

Both intranet and

extranet technologies are based on the same open standards that make up the

Internet. Intranets are Intraconnected

Networks that are usually restricted

to internal access only by a

company’s employees and workers. Often times, an intranet is located behind a

firewall to prevent unauthorized access from a public network.

Extranets are

derived from the term External

Networks that connect an Internet

site or an Internet site to another Internet site using the Internet. In a

sense, it’s an extension of an Internet/intranet site to another site on the

Internet where information and resources may be shared. Common examples of

extranets are links between business partners that need to share information

such as sales data or inventories.

e-commerce and

e-business

The term e-commerce and e-business have become almost as pervasive as the use of Internet.

The “e” stands for electronic and is

used to separate the traditional use of terms like commerce, business, and mail

from the corresponding computer or Internet-based usage of these terms.

What exactly do

e-commerce and e-business mean? e-commerce is about selling products and

services over the Internet in a secure environment and is a subset of

e-business. e-business is about using Internet technologies to transform key

business processes to capitalize on new business opportunities, strengthen

relationships in the supply chain with customers, suppliers, business partners,

and distributors, and become more efficient and in the process more profitable.

The Internet, intranets, and extranets serve as the enabling e-business and

e-commerce technologies. You will learn about this in later chapters.

Internet Protocols

The Internet is

inherently a multivendor computing environment composed of computers from many

manufacturers using various network devices, operating systems, languages,

platforms, and software programs. In order for this diverse array of hardware

and software components to interoperate (or connect and work) with each other,

there must be a standard method or language of communication. This language is

referred to as a protocol.

The Internet is

based on scores of protocols that support each of the types of services and

technologies deployed on the Internet. The basic suite of protocols that allows

this mix of hardware and software devices to work together is called TCP/IP.

TCP/IP

Transmission

Control Protocol/Internet Protocol. TCP/IP became the Internet’s

standard data transfer protocol in 1982 and is the common protocol (or language) that allows communication

between different hardware platforms, operating systems, and software

applications. TCP/IP is a packet switching system that encapsulates data

transferred over the Internet into digital “packets.”

It is important

to understand that clients, servers, and network devices on the Internet must

be running the TCP/IP protocol. This is true for Windows, Macintosh, and UNIX

computer platforms.

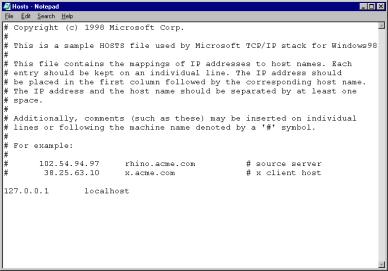

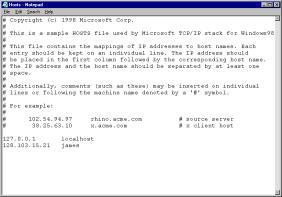

On

a Windows-based client, the TCP/IP protocol is implemented through a software device driver sometimes referred to as

the TCP/IP “stack.” In Windows 95/98 and Windows NT, the TCP/IP stack is built

into the operating system.

HTTP

HTTP stands for HyperText Transfer Protocol. The HTTP protocol operates

together with the TCP/IP protocol to facilitate the transfer of data in the

form of text, images, audio, video, and animation.

Internet Services

The Internet is a

combination of many types of services, and each has it own associated protocol.

It has evolved from a time when only text-based files and e-mail could be

transferred from one computer to another. The most common Internet services

are:

|

|

e-mail-Based on Post Office Protocol (POP) and Simple Mail Transfer Protocol (SMTP). |

|

|

File Transfer-Based on the File Transfer Protocol (FTP) and is used to transfer ASCII and binary files across a TCP/IP network |

|

|

Newsgroups-Network News Transfer Protocol (NNTP) is used for newsgroups. |

|

|

World Wide Web-HyperText Transfer Protocol (HTTP) used for the Web. |

|

|

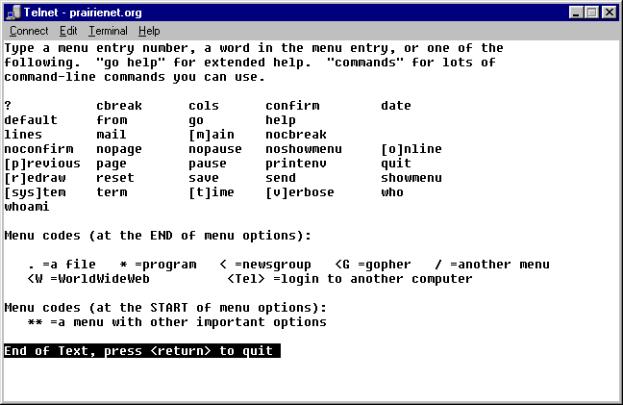

Other Internet services include Telnet, IRC Chat, Archie, and Gopher. Most of these services are available using the Web and e-mail. |

While each of

these services is layered on top of the TCP/IP protocol, they are entirely separate. Originally, these services were

isolated from each other. To download a file, you needed a dedicated FTP

application. To send or receive e-mail, you needed a dedicated e-mail

application. As the Internet and the Web have evolved, these capabilities have

been integrated into Web browsers. This eliminates the need for dedicated

client applications. Figure 1-2 illustrates the types of services that are

currently supported by Web browsers.

Figure 1-2: Multiple Internet

services available via the Web

URL Components and

Functions

A URL is a unique address on the

Internet, similar to an e-mail address. A URL specifies the address of a

server, or a specific Web page residing on a server on the Internet.

A URL is a unique address on the

Internet, similar to an e-mail address. A URL specifies the address of a

server, or a specific Web page residing on a server on the Internet.

A URL also

specifies the transfer protocol.

|

|

The transfer protocol is the method of transferring or downloading information into a browser such as HTTP (for Web pages), FTP (for files), or NNTP (for USENET news). |

|

|

The domain name specifies the address of a specific Web server to which the browser is connecting. Similar to a telephone number, it must be unique. |

|

|

The directory path is the name and directory path of the file on the server being requested by the browser (optional). |

|

|

The filename is the name of the Web page being requested by the browser |

Types of URLs

As explained in the preceding section, URLs vary with respect the selected transfer protocol. The transfer protocol is the method by which information is transferred across the Internet. The transfer protocol determines the type of server being connected to, be it a Web, FTP, Gopher, mail, or news server. Table 1-1 lists the major transfer protocols.

|

Transfer

Protocol |

Server

Type |

URL

Syntax |

|

http |

Web |

http://www.location.com |

|

ftp |

FTP |

ftp://ftp.location.com |

|

gopher |

Gopher |

gopher://gopher.location.com |

|

news |

Newsgroup |

news://news.location.com |

|

mail |

e-mail |

mailto://person@location.com |

|

file |

Local

drive |

file:///c:/directory/filename.htm |

Table 1: Major transfer protocols supported by Web clients

The Domain Name

The domain name

is the Web server address. The domain uniquely defines a company, nonprofit organization,

government, individual, or any other group seeking a Web address. The

traditional way of specifying the server domain name is

http://www.location.com. However, server names do not have to be specified this

way. For example, sometimes the “www” is omitted and the server name is

specified as location.com (or location.net, location.edu, etc.).

The first part of

the domain name is usually the name of the company, person, or organization.

The second part, called the extension,

comes largely from a set of conventions adopted by the Internet community.

Domain Name

Extensions

URLs also vary

with respect to the domain name extensions. Domain names must be qualified by

using one of the following six extensions for sites within the United States.

For sites outside of the United States, country codes are used in place of the

domain extension. Table 1-2 lists the primary domain extensions in use.

|

Domain

Extension |

Description

|

|

.com |

Commercial

business |

|

.net |

Network or Internet

Service Providers (ISP) |

|

.edu |

Educational institution |

|

.gov |

United States Government |

|

.mil |

Military |

|

.org |

Any other organization

(often nonprofit) |

|

us,

uk, de, etc. |

International

country codes |

Table 2: Primary

domain extensions for U.S. based Web site

Directory Path /

Filename

The directory

path is the location of the directory in which the file is located on the Web

server. The directory path is sometimes called the path statement. The filename is the name of the document being

requested by the Web browser. The filename is part of the directory path. The

default filename when entering only the server name is usually index.htm or

index.html.

Internet Port Number

An Internet port

number (also referred to as a socket

number) distinguishes between running applications. In some cases, a port

number may be required and is appended to the server name, such as

http://www.location.com:80 (80 is the default port for Web services). The port

number can usually be omitted and the server’s default port will be used.

The most commonly

used port numbers are:

|

|

FTP—Port 21 |

|

|

Telnet—Port 23 |

|

|

SMTP—Port 25 |

|

|

HTTP—Port 80 |

Assigning Domain

Names

The group in

charge of managing the Domain Name System (DNS) is the Internet Corporation for

Assigned Names & Numbers (ICANN). ICANN is a nonprofit organization, the

purpose of which is to verify that no duplicate domain names are assigned. As

of June 1999, ICANN accredited 42 companies from 10 countries to offer domain

name assignment services. Updated information on ICANN can be obtained from

http://www.icann.org/registers/accredited-list.html

The Internet

Assigned Numbers Authority (IANA) is a voluntary organization that has

suggested some new qualifiers that further differentiate hosts on the Internet

as shown in Table 1-3.

|

Qualifiers

|

Description

|

|

firm |

Business or firms |

|

shop |

Business offerings goods

and services to purchase |

|

arts |

Entities offering cultural

and entertainment activities |

|

web |

Entities offering

activities based on the WWW |

|

nom |

Individual or personal

nomenclature |

|

info |

Entities providing

information services |

Table 3: IANA

suggestions for new qualifiers

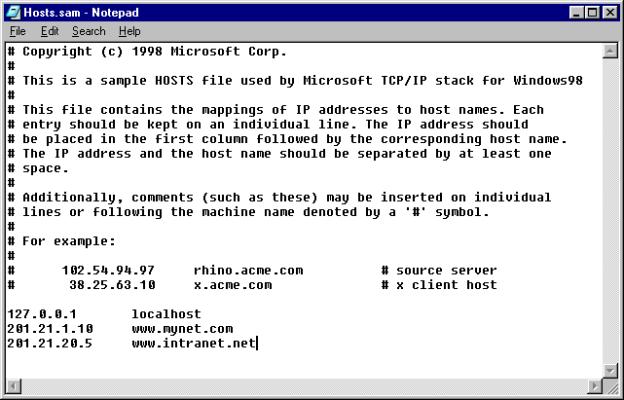

IP Addresses

Each domain name

has a corresponding number assigned to it referred to as an Internet Protocol address, or IP. “IP” is the second part of the “TCP/IP”

protocol.

Just as a domain

name is unique, so is the IP address. IP addresses are what Internet routers

use to direct requests and route traffic across the Internet. IP addresses are

also managed by ICANN.

Domain Name System

The system

designed to assign and organize addresses is called the Domain Name System

(DNS). The DNS, devised in the early 1970s, is still in use today. The DNS was

designed to be user friendlier than IP numbers. Often times, an IP address has

an equivalent domain name. In these cases, a server on the Internet can be

specified using its IP number or domain name. Domain names are much easier to

remember than IP addresses.

Domain names were

created so that URLs could be user friendly and people would not have to enter

the difficult-to-remember IP address. An example of an IP address is

209.0.85.150. This is the IP address that maps to www.comptia.org.

When you enter a

domain name, a special domain name server (a dedicated computer at

your ISP) looks up the domain name from a special file (called a routing table) and directs the message

to the appropriate IP address on the Internet.

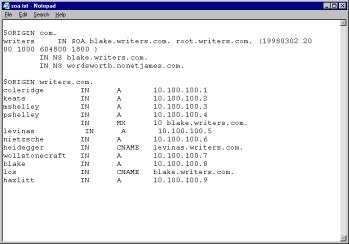

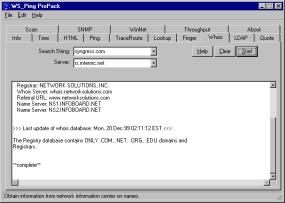

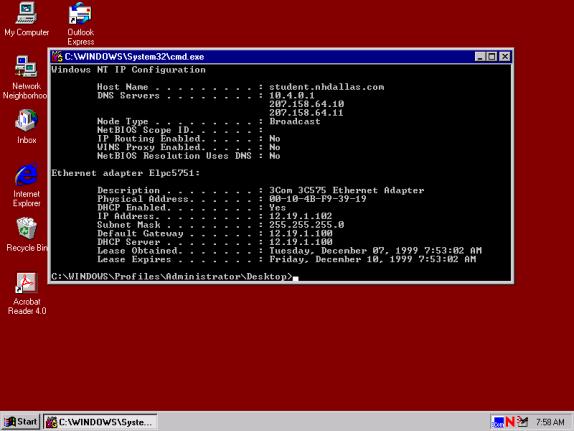

Exercise 1-1:

Converting Domain Names and IP Addresses

In this exercise,

you will access a Web site using both its domain name and its IP address. Given

one of the addresses, you will use a reverse lookup system to convert back and

forth between the two representations.

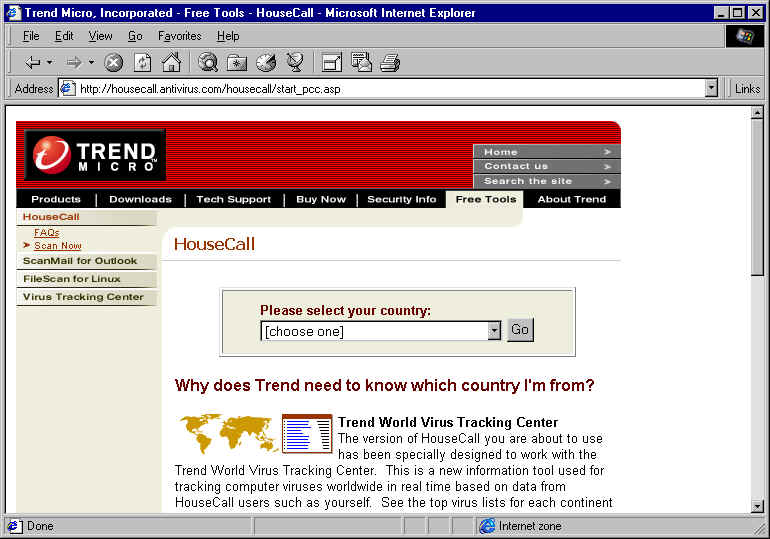

1. Go to http://network-tools.com/ and click on of

the Mirror link sites labeled 1 through 6. Scroll down the page until you see

the input area, shown here:

2. Type the URL www.comptia.org in the Enter Value field, click the Lookup radio button, and press Submit. This is called a reverse domain lookup.

3. Notice the IP address is returned in the text field in place of the domain name.

4. Go to your Web

browser and type the IP address as: http://209.0.85.150/

in the location window, then press Return.

This will take you to the CompTIA Web site. Try typing http://www.comptia.org to verify that this takes you to the

identical location.

5. Go to your Web browser and type the IP address as http://209.0.85.150/ in the location window, and press RETURN. This will take you to the Comptia Web site.

You can also do a lookup on an IP address and it will return the domain name. Feel free to experiment with some of the other services available using http://network-tools.com/.

Internet Site

Performance and Reliability

The performance

and reliability of an Internet or intranet site is critical in order to attract

and retain users. If Web pages take too long to load, the site does not work

reliably, or users are frustrated because they need a superfast connection in

order to view the site, both the users and sponsors of the site will be

disappointed. This next section describes the critical success factors to

creating a high-performance and reliable Web or intranet site.

Internet

Connectivity

Connecting to the Internet may seem like a transparent process to the user, but in order to understand how users connect to the Internet, each of the various communication and interface points must be understood. Data travels from the user’s computer to a remote server, and vice versa. Understanding the path it takes is important to being able to troubleshoot system performance and reliability problems.

Gateways

A gateway is a

device used for connecting networks using different protocols so that

information can be passed from one system to another, or one network to

another. Gateways provide an “onramp” for connecting to the Internet. A gateway

is often a server node with a high-speed connection to the Internet. Most

individuals and smaller organizations use an Internet Service Provider (ISP) as

their gateway to the Internet.

There are two

primary methods of connecting to the Internet: dial-up connections using a

modem, and direct connections. In both cases, you need an ISP. An ISP is

analogous to a cable television company that provides access to various cable

television systems.

Internet Service

Provider (ISP)

Until 1987,

access to the Internet was limited to universities, government agencies, and

companies with servers on the Internet. In 1987, the first commercial ISP went

online in the United States, providing access to organizations and companies

that did not own and maintain the equipment necessary to be a host on the

Internet.

An ISP is your

gateway to the Internet. An ISP maintains a dedicated high-speed connection to

the Internet 24 hours a day. In order to connect to the Internet, you must

first be connected to your ISP. You can obtain a dedicated line that provides a

continuous connection to your ISP (for an additional fee), or connect to the

Internet only when necessary using a modem. Figure 1-3 illustrates a typical

dial-up connection for an end user using an ISP as a gateway to the Internet.

Figure 1-3: Dial-up

connection using an ISP as a gateway to the Internet

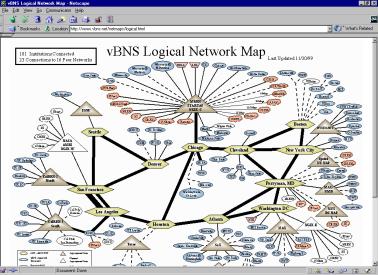

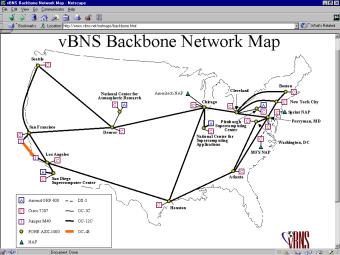

Internet Backbone

The high-speed

infrastructure that connects the individual networks on the Internet is

referred to as the backbone. A

backbone is a high-speed conduit for data to which hundreds and even thousands

of other smaller networks are connected.

Each backbone is

operated and maintained by the organization that owns it. These organizations,

usually long distance carriers, regional phone companies, or ISPs, lease access

to their high-speed backbones to other organizations. The most prominent groups

are ISPs and Telecommunications Companies (TelCos)

Types of Internet

Access and Bandwidth

There are two

primary methods of connecting to the Internet. A dial-up connection using a modem, or a direct connection directly to an ISP or the Internet backbone.

Dial-up connections include analog modems that can operate at a maximum speed

of 56K, and Integrated Services Digital Network (ISDN) connections with a

maximum throughput of 128K a second. Both operate using standard telephone

lines to the Central Office of the Telco.

Direct

connections are carried over high-speed data lines. There is a wide range of

direct connection speeds available. Direct connections can be established at

speeds as slow as 56 Kbps and as fast as 45 Mbps. The higher-speed direct

connections are called T1 and T3 connections. T1 connections operate at 1.5

Mbps. A T3 connection can range in throughput from 3 Mbps to 45 Mbps. T1

connections are very common for business, and T3 connections are used by ISPs

and Telcos.

The main

difference between these two connection methods is speed and cost.

Connection speeds

are measured in either kilobits per second (Kbps) or megabits per second

(Mbps). A megabit (Mb) is a million

bits, and a kilobit (Kb) is a

thousand bits. A megabyte (MB) is a

million bytes, and a kilobyte (KB) is

a thousand bytes. Don’t confuse bits and bytes. One byte = eight bits. Note:

bits are represented by a lowercase “b,” and bytes are shown as an uppercase

“B.” A typical dial-up modem connection operates at speeds of either or 28.8 or

56.6 Kbps. Direct connections are usually measured in Mbps and are many times

faster than dial-up connections.

An advantage of a

dial-up connection is it only requires a standard phone line and a modem, and

is relatively inexpensive. As an alternative to a T1 connection for home and

small business users, others methods such as cable modems and Digital

Subscriber Line direct access are becoming available in most major markets.

These options tend to cost much less than leasing T1 or fractional T1 lines.

With a fractional T1, you are sharing the “pipe” with other users or

organizations, which can have a significant impact on performance.

Internet Site

Functionality

There are many

factors that can influence the functionality of the Internet or a Web site.

Some of these factors are related to the Internet backbone, including network

traffic and congestion; some relate to the quality, reliability, and security

of the hosting site; and still others are related to the design and usability

of a Web site. All of these factors contribute to the overall user experience

when accessing the site.

Performance Issues

In order to

understand performance issues, the entire end-to-end communications

infrastructure must be considered. For example, if users are becoming

frustrated because the access to your company Web site is sluggish, there may

be a variety of reasons for the poor performance.

First, it might

be that their connection speed to the Internet is too slow. Perhaps they are

using an older-generation modem that is slower than 56.6 K. Alternatively,

their ISP may have a slow connection to the Internet that is where the

bottleneck is. Extending out the infrastructure, the problem may be that the

Internet is congested, and response time to your site is slow. This may be the

case during the peak hours during a normal work week.

Finally, the

problem might be on the host or server end of the connection. Issues on this

end could be poor performance related to the bandwidth of an ISP, the speed of

the Internet servers, or the amount of traffic on their site. If a lot of users

are accessing their servers and engaging in a high level of transactions,

performance will slow for all other users. Sometimes security services can be

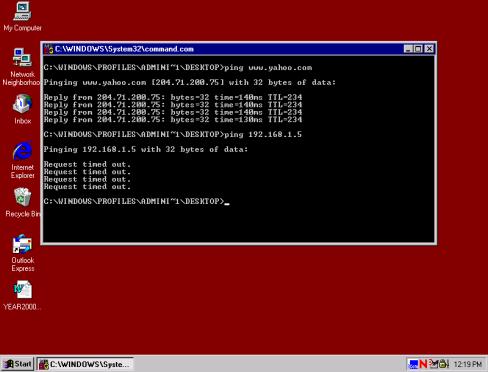

the culprit when running Secure Socket Layer (SSL). In order to troubleshoot

performance, network engineers use a variety of tools such as Ping, Traceroute,

and other proprietary network management tools to isolate performance problems.

Usability and

Audience Access

Another

performance issue that affects overall site functionality is the design and

architecture of your Internet site. It is important to keep in mind that your

site needs to be designed taking into account the access and connection speeds

of your users. You may have a direct

T3 connection to the Internet, and the fastest Web servers available, but if

your users are connecting through a slow dial-up connection, performance can be

significantly degraded.

Always design to

the lowest common denominator if that is your target audience. Alternately, if

you are building an intranet site and all of your users are connecting over a

LAN, you may not be so concerned about performance issues.

On the Job: Before

designing a Web site, you should conduct a user survey and analysis to

determine the range of connection speeds used by customers and end users to

connect to your network. By current standards, the lowest common denominator is

considered to be a 28.8 K or 56.6 K modem connection.

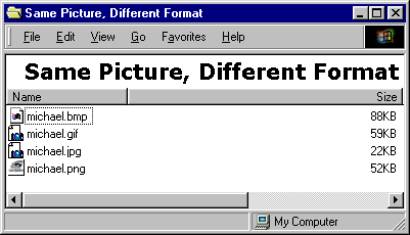

Issues with Embedded

Graphics

One of the

greatest impacts on user performance is the size, format, and techniques used

for embedded graphics. Always strive for fast-loading graphics, whether they be

photographic images, line art, or other graphics.

Performance

issues are often tied to graphics files being too large and taking too long to

download over an ordinary modem connection. This design pitfall can be

addressed by using compression algorithms on the graphic files, scaling the

graphic down in size, selecting the optimal graphic file format, or reducing

the number of colors in an image.

Nothing looks

uglier than a Web page that is supposed to have an embedded graphic file and

instead has a generic file icon showing a question mark. This is usually caused

by a mistake in the HTML document. Either the image file is not where the HTML

document is trying to access it from, or, in some cases, the file may be

corrupted and fails to load, or hangs up while downloading the page. Users

sometimes disable image loading within their browsers if this is impacting

their performance.

One workaround is

to present users with two types of sites: one that is optimized for high-speed

connections and another that is optimized for slower modem connections. The

downside of this approach is that it takes the additional resources to maintain

and update both sites. A clever design architecture can minimize the impact of

maintaining two sites instead of one.

Creating

Fast-Loading Image Files

If your Web pages don’t download quickly, you are likely to lose users. They will become impatient and move on to another site. Adding graphics poses a performance tradeoff.. Does the value of the graphic file justify the increased performance hit when downloading the page? Here are some tips to creating high-performance images”

|

Chapter Summary

This chapter

provided you with an introduction and overview of the Internet. You became

familiar with the origins and evolution of the Internet and the emergence of

internal private networks called intranets.

You were also familiarized with underlying Internet technologies including the

World Wide Web, Web browsers, Web servers, and Uniform Resource Locators

(URLs).

You also learned

about factors that affect Internet / intranet / extranet site functionality and

performance, including bandwidth constraints, customer and user connectivity,

connection access points, and throughput. Last, you learned how e-business

leverages these technologies by linking organizations to their supply chain,

including business partners, suppliers, distributors, customers, and end users

using extranets and the Internet.

Two-Minute Drill

|

|

The Internet is perhaps best described as the world’s largest Interconnected Network of networks. |

|

|

ARPA is an agency within the United States Federal Government formed by the Eisenhower administration in 1957 with the purpose of conducting scientific research and developing advanced technologies for the U.S. military. |

|

|

International standards bodies, including the Internet Engineering Task Force (IETF), the Internet Society (ISOC), and the World Wide Web Consortium (W3C) are leading the process to develop international standards. |

|

|

The idea for the Web is attributed to Tim Berners-Lee of CERN, the European Laboratory for Particle Physics in Geneva, Switzerland. |

|

|

HTML is a Web-based standard that describes how Web documents are displayed in a Web browser or thin client. |

|

|

The basic suite of protocols that allows the mix of hardware and software devices to work together is called TCP/IP. |

|

|

On a Windows-based client, the TCP/IP protocol is implemented through a software device driver sometimes referred to as the TCP/IP “stack.” |

|

|

A URL specifies the address of a server, or a specific Web page residing on a server on the Internet. |

|

|

The first part of the domain name is usually the name of the company, person, or organization. The second part, called the extension, comes largely from a set of conventions adopted by the Internet community. |

|

|

The group in charge of managing the Domain Name System (DNS) is the Internet Corporation for Assigned Names & Numbers (ICANN). |

|

|

A gateway is a device used for connecting networks using different protocols so that information can be passed from one system to another, or one network to another. |

|

|

The high-speed infrastructure that connects the individual networks on the Internet is referred to as the backbone. |

Chapter 2: Indexing and Caching

Web

Caching Increases Network Performance. 3

Web

Cache Communication Protocol

Certification Objectives

|

|

Web Caching |

|

|

File Caching |

|

|

Proxy Caching |

|

|

Cleaning Out

Client-Side Cache |

|

|

Server May Cache

Information As Well |

|

|

Web Page Update

Settings in Browsers |

|

|

Static Index/Site

Map |

|

|

Keyword Index |

|

|

Full Text Index |

|

|

Searching Your

Site |

|

|

Searching Content |

|

|

Indexing Your Site

for a Search |

In this chapter

we are going to look at two important concepts that help make the Internet the

highly functional communication device we know it to be. The first part of the

chapter will cover the concepts and implementations of caching. We will also

cover the end-to-end process of caching, going from the client to the Web

server. In the second half of the chapter, we will examine the various types of

search indexes and methods of utilizing them effectively. In addition to

utilizing other search engines, we will cover topics related to indexing and

searching our own Web site. Finally we will look at Meta tags and how they can

help you configure your Web site for better searching.

Web Caching

Caching is the

process of storing requested objects at a network point closer to the client,

at a location that will provide these objects for reuse as they are requested

by additional clients. By doing so, we can reduce network utilization and

client access times. When we talk about Web objects or objects in general, we

are simply referring to a page, graphic, or some other form of data that we

access over the Internet, usually through our Web browser. This storage point,

which we refer to as a cache, can be implemented in several different ways,

with stand-alone proxy caching servers being popular for businesses, and

industrial-duty transparent caching solutions becoming a mainstay for Internet

Service Providers (ISPs). Within this section, we will focus on different

aspects and implementation issues of Web caching, as well as the methods for

determining a proper solution on the basis of the situation requirements.

Web Caching Client

Benefits

A request for a

Web object requires crossing several network links until the server housing the

object is reached. These network crossings are referred to as hops, which

generally consist of wide area network (WAN) serial links and routers. A

typical end-to-end connection may span 15 hops, all of which add latency or

delay to the user’s session as objects are directed through routers and over

WAN links. If a closer storage point for recently accessed objects is

maintained, the number of hops is greatly reduced between the client and the

original server. In addition to the reduced latency, larger amounts of

bandwidth are available closer to the client; typically cache servers are

installed on switched fast Ethernet networks, which can provide up to 200 megabits

per second data transfer rates. With these speeds, the limiting factor becomes

the link speed between the client and the provider’s network where the caching

server is located. Even though caching servers can benefit users of smaller

networks, the solution tends to be more effective when implemented with a

larger user base. This is due in part to the expiration period of cached items

and the fact that larger user bases exhibit higher degrees of content overlap,

and more users can share a single cached item within a shorter period of time.

Web Caching

Increases Network Performance

Because of the

rising demand for bandwidth and the associated costs, we must find alternatives

to adding additional circuits. A look at the traffic statistics available at

http://www.wcom.com/tools-resources/mae_statistics/ gives us an idea of how

peak times affect network utilization. Web caching allows us as network

administrators and system engineers to reduce bandwidth peaks during periods of

high network traffic. These peaks are usually caused by a large number of users

utilizing the network at the same time. With a caching solution in place, there

is a high likelihood that users’ requests will be returned from cache without

the need to travel over a WAN link to the destination Web server.

Determining Cache

Performance

It is apparent

that caching can provide increases in network performance, reduce latency, and

maximize bandwidth. The question that a network administrator usually faces in

evaluating a current or proposed caching solution is not how, but how much.

Most cache performance analyses are done on the basis of a cache hit ratio.

n (Requests Returned from Cache) / x (Total Requests) = y (Cache Hit Ratio)

This is the

number of requests that are to be retrieved from the server’s cache divided by

the total number of requests. The cache hit ratio is usually expressed as a

percentage, with the higher number representing a better-performing cache.

Caching Server

Placement

Because object

cache is time-dependent, caching becomes more effective as the number of users

increases. The likelihood of 10 users’ sharing Internet objects is fairly small

compared to duplicated object access for 20,000 users. Because of this trend it

becomes necessary to implement caching servers in strategic locations across

the network. These locations are determined by weighing such factors as

available upstream bandwidth, protocol usage, supported client base, client

connection speeds, client traffic patterns, staffing, and server

considerations.

|

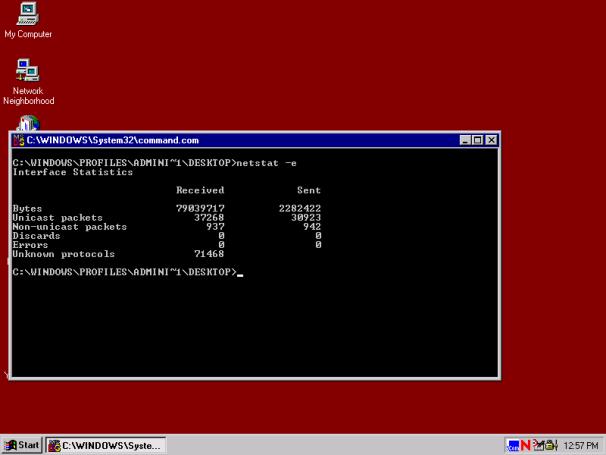

Exercise 2-1:

Hands-On Latency and Bandwidth Comparison Increased

distance between client computers and the origin servers adds latency and

increases the risks of bottlenecks. Most connections will pass over 10 to 20

routers before an end to connectivity is established. These routers along the

network path are referred to as hops. The TCP/IP Trace route utility can

determine the number of hops between your current location and another point

on the Internet. Local caching servers typically are located within two or three

hops of client computers. To use the Trace route utility you must have a

computer running the TCP/IP protocol suite, connected to the Internet (or

Local Area Network), and know the IP address or fully qualified domain name

(FQDN) of the host you wish to trace to. The syntax on a Windows computer is

as follows: Tracert 192.233.80.9 Compare this to your corporate Web server, your ISP’s Web site, or another machine on the LAN. Once

you have seen a comparison of the hops and the Time To Live (TTL) counts for

both Trace route commands, you can now relate the difference by bringing the

two sites up in your browser. Depending on the overall file size of the

viewed pages, you should see a considerable performance difference between

the two visited Web sites. This exercise helps to demonstrate the two main

client benefits for utilizing proxy servers: reduced latency and increased

speed (throughput). |

Passive Caching

Passive caching

represents the most basic form of object caching. Passive caching servers

require less configuration and maintenance, but at the price of reduced

performance. Passive caching, as the name implies, makes no attempt to

“prefetch” Internet objects.

Passive caching

starts when a caching server receives an object request. The caching server will

check for the presence of the requested object in its local cache. If the

object is not available locally, the caching server will request the object

from the location originally specified by the requesting client, this is

referred to as the origin server. If the object is available locally, but the

content is determined stale by examining the Time To Live property (TTL), then

it will also request the object from the origin server. Finally, if the object

is available within the caching server’s local cache, and the content is

considered fresh, then the server will provide the content to the requesting

client directly. After the caching server fulfills the user’s request, the

object is inserted into the server’s local cache. If the disk space allocated for

caching is too full to hold the requested objects, previously cached objects

will be removed on the basis of a formula that evaluates how old the content

is, how often an object has been requested, and the size of the object.

Unlike active

caching, passive caching is performed strictly on a reactive basis. Passive

caching would be a good choice for locations with limited support personnel,

and where the performance gains would not merit the added configuration and

tuning required by active caching.

In addition to

the most popular configuration as stand-alone servers, passive caching servers

can be configured as members of clusters for fault tolerance, and as array

members for performance gains. This is not typically done, because the expense

of additional caching servers is usually justified only when qualified

personnel are available to configure, maintain, and tune complex caching

setups.

Active Caching

Active caching

servers use a proactive approach referred to as prefetching to maximize the

performance of the server’s cache by increasing the amount of objects that are

available locally on the basis of several configurations and a statistical

analysis. If the likelihood that a requested object will be retrieved from a

local cache is increased, performance gains are seen in the overall cache

server process. Active caching takes passive caching as a foundation and builds

upon it with increased performance and enhanced configuration options.

With most passive

caching servers, a object that is retrieved from an origin server is placed in

the cache, and a Time To Live (TTL) property is set. As long as the TTL has not

expired, the caching server can service client requests locally without the

need to recontact the origin server. After the TTL property for the object has

expired, additional client requests will reinitialize the caching process.

Active caching

builds up to this process by initiating proactive requests for specified

objects. Active caching servers can use several factors to determine what

objects to retrieve before any actual client requests are received. These

factors can include network traffic, server load, objects’ TTL properties, and

previous request statistics.

Active caching

helps maintain higher levels of client performance, when clients can access

pages that are stored in a caching server, the transfer speed is increased, and

overall session latency is reduced. With active caching, clients have a higher

cache hit rate, allowing more objects to be returned from a point closer to the

client and returned at a higher speed. Active caching will also check the

objects that are cached locally, and will refresh the objects during off-peak

periods before they expire. This helps to maximize unused network time and

increase the likelihood of returning fresh data.

Caching Related

Protocols

The use of

caching requires the use of a protocol. There are three protocols used in to

caching. These protocols are the Internet Cache Protocol, the Caching Array

Routing Protocol, and the Web Cache Communication Protocol. Each protocol has a

very defined usage as well as pros and cons.

Internet Cache

Protocol

Internet Cache

Protocol (ICP) allows several joined cache servers to communicate and share

information that is cached locally among the servers. ICP is based upon the

transport layer of the TCP/IP stack, utilizing a UDP or connectionless based

communication between the configured servers. With ICP, adjoining caching

servers are configured as ICP Neighbors. When a caching server that is acting

as an ICP Neighbor receives a request and does not have the object available

locally, it will send an ICP query to its ICP Neighbors. The ICP Neighbors will

in turn send replies that will indicate whether the object is available: “ICP

Hit,” or the object is not available: “ICP Miss.” While ICP can improve

performance in a group of caching servers, it introduces other

performance-related issues. Because ICP is required to send requests to each

participating ICP Neighbor for a nonlocally available object, the amount of

network traffic will increase proportionality as the number of joined caching

servers increases. The other downside of utilizing ICP is that additional

requests add to the latency of the users’ session due to the wait period for

ICP replies. ICP servers also tend to duplicate information across the servers

after a period of time. This may seem like a benefit initially; the duplication

of content across cache servers in an array will lower the overall

effectiveness, which will be evident in our cache hit ratio.

Caching Array

Protocol

The Caching Array

Routing Protocol (CARP) provides an alternative method to ICP to coordinate

multiple caching servers. Instead of querying neighbor caches, participating

CARP servers use an algorithm to label each server. This algorithm or hash

provides for a deterministic way to determine the location of a requested

object. This means that each participating server knows which array member to

check with for the requested object if it is not present on the server that

received the request.

In contrast to

ICP-based arrays, CARP-based arrays become more efficient as additional servers

are added. CARP was originally

developed by Microsoft, but has since been adopted by several commercial and

freeware caching solutions.

Web Cache

Communication Protocol

The Web Cache

Communication Protocol was developed by Cisco in order to provide routers with

the ability to redirect specified traffic to caching servers. With WCCP Version

2, the previous limitations of single routers have been replaced with support

for multiple routers. This is important in environments in which the router

introduced a single point of failure. WCCP reads into the TCP/IP packet

structure and determines the type of traffic according to which port is present

in the header. The most common TCP/IP port is port 80, which is HTTP traffic.

This allows the router to forward Web requests to a caching server

transparently while maintaining direct access for other protocols such as POP3

or FTP.

Transparent Caching

Transparent

caching servers require additional complexity and configuration at the gateway

point where the client requests are redirected to the cache servers. Since most

Web protocols are TCP based, redirection happens at the transport layer (layer

4) of the OSI model. Transparent cache clients are unaware of the middleman,

and are limited in their ability to control if the request is returned from the

origin server or the cache.

Single Server

Caching

Single server

caching is the idea that you have one server acting as the caching server. This

is generally seen primary on a small LAN; more then 10 or 12 users will easily

overrun a single cache server.

Clusters

Clusters are

groups of systems linked or chained together that are used in the caching of

information for a company or ISP. By having a cluster of cache servers you can

reduce bandwidth while increasing production time for users, as they do not

have to wait for the page to be retrieved from the Internet.

Hierarchies

Hierarchical

caching occurs when you place a cache server at each layer of your network in a

hierarchical fashion just as you do with routers. Your highest cache server

would be just inside your firewall, which would be the only server responsible

for retrieving information from the Internet. This server or cluster of servers

would then feed downstream to other cache servers such as department, building,

or location cache servers.

Parent-Sibling

The

parent-sibling caching works much like the hierarchies’ caching except that the

sibling caches are all working together. Each sibling cache is aware of what

the other caches are storing and can quickly request from the correct cache

server. If a new request comes in, the siblings will send the request to the

parent cache server, which will either return the requested information or

retrieve it.

Distributed Caching

Distributed

caching is much like proxy clusters. The idea is that you have several proxy

servers working together to reduce the load of retrieving the information from

the Internet. This also acts as a fault tolerance, so that if one of the proxy

serves goes offline the others will automatically be able to respond to the

requests.

File Caching

In addition to

causing HTTP traffic, file transfers that take place across the Internet

consume large amounts of network bandwidth as well. The file transfer protocol

represents a means for a connection-oriented exchange of information between

two systems. In the early days of the Internet, FTP was a mainstay among mostly

Unix-type systems. FTP’s cross-platform communication capability has led it to

become one of the most popular standards for data transfer. Because FTP

sessions tend to involve larger amounts of data than a typical HTTP session,

benefits from caching FTP objects can be substantial.

Using caching for

FTP requests in a large company can reduce request time and bandwidth

dramatically. If 50 employees downloaded a 1MB file each morning, and each

request were made directly to the server housing the file that is maintained in

the corporate office, it would require 50MBof data to be downloaded across the

network, probably via a WAN connection. If a cache server were implemented,

only the first request for the data would be directed to the housing server;

each additional request for the same data would be served by the cache server.

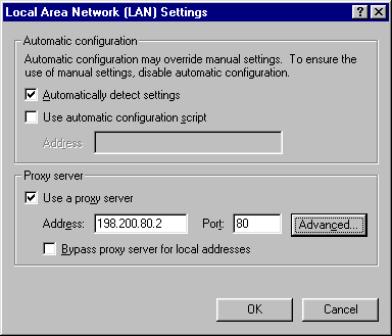

Proxy Caching

Proxy caching

works through a cooperative connection between the browser and the caching

server, rather than between the browser and the remote origin server. When a

client is configured to use a particular caching proxy server (or any proxy

server, for that matter), it directs all of its requests for a particular

protocol (HTTP, FTP, GOPHER, and so on) to the port on the proxy server

specified in the browser configuration. Because several different protocols can

be proxied and cached, most browsers allow for different configurations for

each protocol. For example, we can specify that HTTP (port 80) requests be sent

to our Web-caching server, which could be located at 192.168.0.2 at port 8080.

At the same time we can configure our clients’ Web browsers to send FTP (port

21) requests to our file-caching server located at 192.168.0.3 at port 2121.

This allows for dedicated servers to provide caching for different protocols.

This also allows for better management of network resources; if one server is

responsible for caching all data, it will have to time out more quickly, due to

space requirements.

HTTP requests to

a target Web server, and the browser directs them to the cache. The cache then

either satisfies the request itself or passes on the request to the server as a

proxy for the browser (hence the name).

Proxy caches are

particularly useful on enterprise intranets, where they serve as a firewall

that protects intranet servers against attacks from the Internet. Linking an

intranet to the Internet offers a company’s users direct access to everything

out there, but it also causes internal systems to be exposed to attack from the

Internet. With a proxy server, only the proxy server system need be literally

on the Internet, and all the internal systems are on a relatively isolated and

protected intranet. The proxy server can then enforce specific policies for

external access to the intranet.

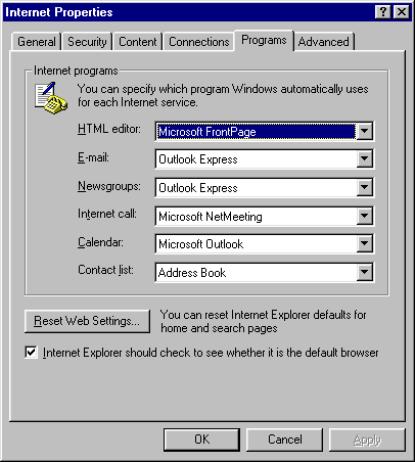

The most obvious

disadvantage of the proxy configuration is that each browser must be explicitly

configured to use it. Earlier browsers required manual user setup changes when

a proxy server was installed or changed, which was a support headache at best

for ISPs supporting thousands of users. Today, a user can configure the current

version of either Navigator or Internet Explorer to locate a proxy without

further user involvement.

Note: Eventually,

browser setup and support will be completely automated. A typical browser will

automatically find whatever resources it needs, including caches, each time it

begins operation. At that time, proxy caches will be completely transparent to

the browser user. Today, however, transparency issues are a key inhibitor to

the use of proxy caches.

Another

disadvantage of the proxy configuration is that the cache itself becomes

another point of system failure. The cache server can crash and interrupt

Internet access to all intranet systems configured to use the proxy for access.

The cache server can become overloaded and become an incremental performance

limitation. To help insure that the server is not overloaded, it should be

running only proxy software and should have large amounts of storage and memory

installed. A high-end processor such as a Pentium III or RISC chip would also

help insure that the proxy/cache servers do not cause a network bottleneck.

Cleaning Out

Client-Side Cache

Up to this point

we have focused on server-side caching. If we focused entirely on server-side

caching we would be ignoring the fact that the most prevalent form of caching

takes place on the client itself. This client cache is designed to reduce the

load times for objects that are static in nature, or dynamic objects that

haven’t changed since the client’s last visit. The client cache stores these

objects locally on the client computer, within memory, and in an allocated

section of hard disk space. Both Microsoft’s Internet Explorer and Netscape’s

Navigator browsers have settings that allow the user to control caching

functions and behaviors. The different settings include the location of the

cache, the size of the cache, and the time when the browser compares the cached

objects to the ones on the remote Web server.

The most

important aspect of client-side caching is that is requires storage space on

the hard disk to work effectively. Once the disk space allocated for caching

has become full, it can no longer work properly. It is for this reason that it

must be emptied periodically to maintain maximum performance.

It is generally a

good idea to periodically clean this cache out or have it set for a lower

number of days so that it will automatically be flushed. By setting the history

to a high number, beyond 14 days, you run the risk of retrieving stale

information from cache, and of not being able to store additional information

in cache if the alotted space is full.

By controlling

the amount of cache being used and the TTL of the cache, you can increase your

performance and decrease the network bandwidth usage drastically, as your

browser will always check the local cache before sending a request out either

to a proxy server or to the orginating server.

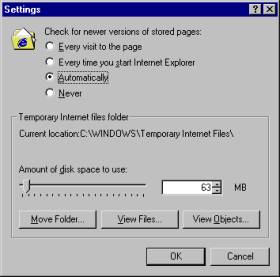

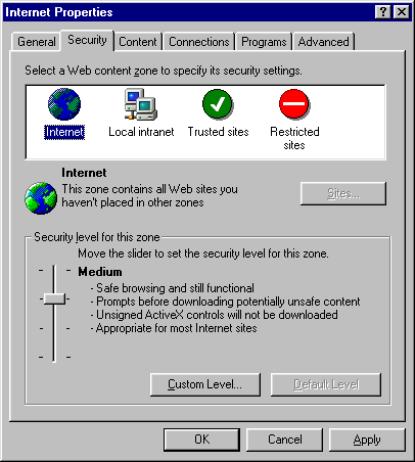

Exercise 2-2:

Internet Explorer 5

- Start Internet Explorer.

- On the Tools menu, click Internet Options.

- On the General tab, in the Temporary Internet Files

section, click Settings (Figure 2-1).

4.

Change the Amount of disk space

to use setting by dragging the slider (Figure 2-2).

- Click OK, and then click OK again.

Figure 2-1: Internet Explorer

5.x—Internet settings

Figure 2-2: Internet Explorer

5.x—Temporary Internet File properties

Internet Explorer

4.0, 4.01

- Start Internet Explorer.

- On the View menu, click Internet Options.

- On the General tab, in the Temporary Internet Files

section, click Settings.

- Change the Amount of disk space to use setting by dragging

the slider.

- Click OK, and then click OK again.

Internet Explorer

3.x

- Start Internet Explorer.

- On the View menu, click Options.

- Click the Advanced tab.

- In the Temporary Internet Files section, click Settings.

- Change the setting by dragging the slider.

- Click OK, and then click OK again.

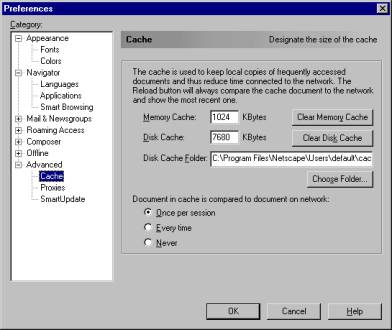

Netscape

Navigator 4.x

- Open the Edit menu and choose Preferences.

- Click the Advanced category and select Cache.

- Click the Clear Disk Cache button and the Clear Memory

Cache button (Figure 2-3).

- Click OK.

Figure 2-3: Preference screen shot

for Netscape 4.x Cache settings

Server May Cache

Information As Well

Web servers are

designed to handle multiple simultaneous requests from Internet clients in the

most efficient matter. Because the pages and objects that a Web server provides

are stored on a hard disk or a disk array, there can be latency experienced

while the information is retrieved. Commonly used information can be stored in

the Web server’s memory, and they can be returned more efficiently without the

added processor utilization and latency from disk I/O. The amount of data that

can be cached on a Web server depends on the amount of physically available

memory. Most Web servers face a performance bottleneck in the area of memory,

which will limit the amount of data the Web server can cache. This is one of

the many reasons that it is prudent to load test and monitor server performance

on a regular basis.

Web Page Update

Settings in Browsers

In the dilemma

caused by the necessity to choose between a transparent caching server and a

proxy-caching server, a significant area of consideration is that of client

configuration. While some large ISPs use proprietary clients, most customers

demand the use of a client of their choice. A proxy client needs to be

configured to direct its Internet requests to a specific proxy server. Early

proxy clients required that the settings be manually entered into each client.

Even with today’s technologies, this would make proxy caching difficult to

implement on a large basis, especially for ISPs. There has been a tremendous

amount of effort expended on developing solutions that provide for the

performance, fault tolerance, and security of proxy caching servers while

reducing the effort to configure clients. This has led to such technologies as

Proxy Automatic Configuration files and the Web Proxy Automatic Discovery

protocol.

Proxy Automatic

Configuration

Proxy Auto

Configuration (PAC) files allow the browser to reconfigure its proxy settings

based on information stored within this file. During the initial client

installation, a URL is supplied to direct the browser to check for updates on a

periodic basis. The code is usually written in JavaScript and stored at a

location within the local area network, or at the remote access point. Both

Netscape and Internet Explorer browsers support this function, making it

feasible to deploy in mixed environments.

The following is

a JavaScript function that determines how the current protocol being used

redirects the browser to the appropriate proxy server. If no appropriate

protocol or proxy server is determined, the client will attempt to establish a

direct Internet connection. This can be extremely useful in a setting where

only certain protocols should be retrieved from proxy servers.

function

DetermineProxy(url, host)

}

if (url.substring(0, 5) == "http:") {

return "PROXY myWebProxy:80";

}

else if (url.substring(0, 4) == "ftp:") {

return "PROXY myFTPProxy:80";

}

else if (url.substring(0, 6) == "https:") {

return "PROXY mySSLProxy:8080";

}

else {

return "DIRECT";

}

Web Proxy Automatic

Discovery Protocol

This protocol

allows a browser to automatically detect proxy settings. Web Proxy Automatic

Discovery (WPAD) is supported through the use of the dynamic host control

protocol (DHCP) and the Domain Name Server (DNS) system. Once the proper

settings are configured, DHCP and DNS servers can automatically find the

appropriate proxy server and configure the browser’s settings accordingly. To

supply the client with the necessary configuration through DHCP, the DHCP

server must support the DHCPINFORM message. If this is not available you will

need to use DNS. WPAD is currently supported only in Internet Explorer 5,

making it a viable solution only in strictly defined networks.

Static Index/Site Map

A static index

allows visitors to your Web site to choose from a list of hyperlinks that will

direct them to the appropriate content. This is very similar to a book’s table

of contents. As webmasters we must insure that we structure our information in

a manner that is easily understood and navigated. Static indexes allow us to

explicitly define where content is located and to assist visitors by placing

these links in a readily accessible area such as the homepage. By defining

these areas of content and placing static indexes, we allow the users to spend

their time more efficiently and encourage them to visit our site again.

Static indexes

need to be layered out in an easy-to-understand manner so that the user will be

able to locate the item or area of choice quickly and easily. A static index

does not change unless Web designer or webmaster updates the page. This does not

allow users to search the site for the content they wish to locate. A good

example of this can be found at http:\\www.snap.com; the tabs across the top

center of the page are a static index that allows you to go quickly to the

section you choose by clicking.

|

Exercise 2-3 Pick two

Internet Sites that you are familiar with, preferably two with similar

content. Analyze how information is being presented, do you have to search,

what happens if you don’t know what you are looking for and just doing

general browsing |

Keyword Index

The amount of

information contained on Web site can be overwhelming. Users wish to locate

what they are looking for quickly. The idea of keywords has become a very

important part of searching a Web page for content. A user can enter a keyword

such as “1957” on a car parts page and be given all the content that has been

marked with “1957.” The following is an example of how keywords are coded into

a page:

<META

NAME="keywords" CONTENT="keywords for your site here">

Depending on the

operating system you are using and the Web server software you have installed,

there are some keywords that are reserved. These are words that are used by the

software for other purposes. The words “and,” “or,” “add,” “Join,” and “next”

are all reserved and are used by the OS or other software. They cannot be used

as part of your keyword list. It is recommend that you make your keywords as

specialized for your page as possible. If you were creating the car parts Web

page used the in the example above, you would not want to use the word “car” as

a keyword, as all your parts would be returned as a good result to the user.

Full-Text Index

A full-text index

stores all the full-text words and their locations for a given table, and a

full-text catalog stores the full-text index of a given database. The full-text

service performs the full-text querying. This allows Index Server to make use

of the structure of the document, rather than just using the raw content. It

indexes full text in formatted data such as Microsoft Excel or Word documents.

Index Server also has the ability to look inside private data formats via the

open standard IFilter interface. Unlike some other systems that are limited to

text files, Index Server can read a variety of document formats.

Index Server is a

great idea for sites containing large amounts of data in documents that a user

might use in research. The program will take each document and record every

word, with the exception of words contained in the noise words list. Noise

words lists are used for words that will appear too many times to be of use for

a search; examples are a company’s name, or words such as “the,” “and,” and

“I.” Researchers do not want to be returned 10,000 results with their search;

they would rather be returned 10.

Searching Your Site

When designing

your Web site you need to keep in mind who will be accessing the site for

information. Languages are written with various characters. Character sets for

the majority of the world are listed in Table 2-1. These character sets include

the normal letters and numbers of the language as well as special characters

(for example, Greek letters WFD would fall

under character set 1253).

Table 2-1: Character Sets Used in

Web Coding

|

Base

Charset |

Display

Name |

Aliases

(Charset IDs) |

|

1252 |

Western |

us-ascii,

iso8859-1, ascii, iso_8859-1, iso-8859-1, ANSI_X3.4-1968, iso-ir-6,

ANSI_X3.4-1986, ISO_646.irv:1991, ISO646-US, us, IBM367, cp367, csASCII,

latin1, iso_8859-1:1987, iso-ir-100, ibm819, cp819, Windows-1252 |

|

28592 |

Central

European (ISO) |

iso8859-2,

iso-8859-2, iso_8859-2, latin2, iso_8859-2:1987, iso-ir-101, l2, csISOLatin2 |

|

1250 |

Central

European (Windows) |

Windows-1250,

x-cp1250 |

|

1251 |

Cyrillic

(Windows) |

Windows-1251,

x-cp1251 |

|

1253 |

Greek

(Windows) |

Windows-1253 |

|

1254 |

Turkish

(Windows) |

Windows-1254 |

|

932 |

Shift-JIS |

shift_jis,

x-sjis, ms_Kanji, csShiftJIS

|

|

EUC-JP |

EUC |

Extended_UNIX_Code_Packed_Format_for_Japanese,

csEUCPkdFmtJapanese, x-euc-jp

|

|

JIS |

JIS |

csISO2022JP,

iso-2022-jp |

|

1257 |

|

Windows-1257 |

|

950 |

Traditional

Chinese (BIG5) |

big5,

csbig5, x-x-big5 |

|

936 |

Simplified

Chinese |

GB_2312-80,

iso-ir-58, chinese, csISO58GB231280, csGB2312, gb2312 |

|

20866 |

Cyrillic

(KOI8-R) |

csKOI8R,

koi8-r |

|

949 |

Korean |

ks_c_5601,

ks_c_5601-1987, Korean, csKSC56011987 |